Extract the downloaded Spark .tgz archive to the C drive, such as C:\spark-2.2.0-bin-hadoop2.7. Optional: open the C:\spark-2.2.0-bin-hadoop2.7\conf folder, and make sure “File Name Extensions” is checked in the “View” tab of Windows Explorer. Open the new file and change the log level from INFO to ERROR for log4j.rootCategory.
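The log level change can also be scripted. The following is only a minimal sketch: it assumes the new file is a log4j properties file whose root logger line looks like `log4j.rootCategory=INFO, console` (the sample text below is an assumption, not copied from the post), and the helper name `set_root_level` is hypothetical.

```python
import re


def set_root_level(properties_text, new_level="ERROR"):
    """Return the text with the level of log4j.rootCategory replaced.

    Only the first token after '=' (the level) is changed; any appenders
    listed after the comma are left untouched.
    """
    pattern = re.compile(r"^([ \t]*log4j\.rootCategory[ \t]*=[ \t]*)\w+", re.MULTILINE)
    return pattern.sub(lambda m: m.group(1) + new_level, properties_text)


# Assumed sample content; a real file will contain more settings.
sample = (
    "# Set everything to be logged to the console\n"
    "log4j.rootCategory=INFO, console\n"
)

updated = set_root_level(sample)
print(updated)
```

To apply this to a real file, you would read its text, pass it through `set_root_level`, and write the result back; editing the line by hand in a text editor works just as well.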

# How to download and install Spark and Scala

You might need to choose the corresponding package type. If necessary, download and install WinRAR so you can extract the downloaded .tgz file.

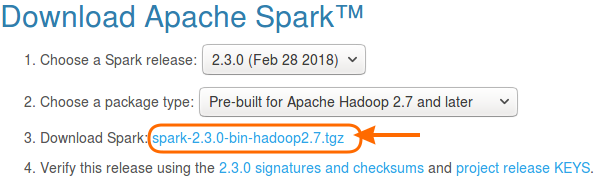

Programs written and tested locally can be run on a cluster with just a few additional steps. In addition, the standalone mode can also be used in real-world scenarios to perform parallel computing across multiple cores on a single computer.

In this post, I will walk through the steps of setting up Spark in standalone mode on Windows 10.

Spark itself is written in Scala and runs on the Java Virtual Machine (JVM), so Java has to be installed on every machine on which you are about to run a Spark job. Download the Java JDK from the Oracle download page and keep track of where you installed it.

Value: C:\Program Files\Java\jdk1.8.0_161 (or your installation path)

After the installation, you can check from the command line that Java is installed correctly. The output should include lines such as:

Java(TM) SE Runtime Environment (build 1.8.0_161-b12)
Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)

Download a pre-built version of Apache Spark from the Spark Download page. The version I downloaded is 2.2.0, which was the newest version available at the time this post was written. You can download an older version from the drop-down list, but note that before 2.0.0, Spark was pre-built for Apache Hadoop 2.6.
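The Java check described above can be automated. This is a rough sketch, assuming the version output has the shape quoted in this post (the kind of text `java -version` prints); the function names are hypothetical, and the regular expression is only an assumption about that output format.

```python
import re
import subprocess

# The two output lines quoted in this post, used here as sample input.
SAMPLE_OUTPUT = (
    "Java(TM) SE Runtime Environment (build 1.8.0_161-b12)\n"
    "Java HotSpot(TM) 64-Bit Server VM (build 25.161-b12, mixed mode)\n"
)


def parse_runtime_build(version_output):
    """Return the runtime build string, or None if the line is not found."""
    match = re.search(r"Runtime Environment \(build ([^)\s]+)\)", version_output)
    return match.group(1) if match else None


def is_java8(build):
    """True if the build string looks like a Java 8 (1.8.x) runtime."""
    return build is not None and build.startswith("1.8.")


def read_java_version_output():
    """Run `java -version` and return its text (it is written to stderr)."""
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return result.stderr


build = parse_runtime_build(SAMPLE_OUTPUT)
print(build, is_java8(build))
```

On a machine with Java installed, `parse_runtime_build(read_java_version_output())` would perform the same check against the real installation; if Java is not on the PATH, `subprocess.run` raises `FileNotFoundError`, which is itself a sign that the installation needs attention.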

# How to download Spark and Scala driver

The driver runs inside an application master process, which is managed by YARN on the cluster, and worker nodes run on different data nodes. As Spark’s local mode is fully compatible with cluster modes, the local mode is very useful for prototyping, developing, debugging, and testing.
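To illustrate how little changes between a local run and a cluster run, here is a small sketch that builds the `spark-submit` command line for each case. The application file `my_app.py` and the helper `spark_submit_args` are hypothetical; `--master` and `--deploy-mode` are standard `spark-submit` options, but the exact values you need depend on your cluster.

```python
def spark_submit_args(app_path, master="local[*]", deploy_mode=None):
    """Build the argument list for a spark-submit invocation.

    master: e.g. "local[4]" for four local cores, or "yarn" for a YARN cluster.
    deploy_mode: "client" or "cluster"; omitted from the command when None.
    """
    args = ["spark-submit", "--master", master]
    if deploy_mode is not None:
        args += ["--deploy-mode", deploy_mode]
    args.append(app_path)
    return args


# Same (hypothetical) application, two different targets.
local_args = spark_submit_args("my_app.py", master="local[4]")
cluster_args = spark_submit_args("my_app.py", master="yarn", deploy_mode="cluster")

print(" ".join(local_args))    # spark-submit --master local[4] my_app.py
print(" ".join(cluster_args))  # spark-submit --master yarn --deploy-mode cluster my_app.py
```

The application itself stays the same; only the master and deploy mode differ, which is why prototyping locally first is convenient.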
